New Features for Administrators course introduces administrators to new or enhanced features and options. The course consists of two parts. The second part of the course presents five modules : security, data availability, sectioning, data information lifecycle, database operations execution monitoring, performance management.

The program :

- Unified Auditing – Universal Unified Auditing

- New privileges to control data access

- Privilege analysis

- Data Redaction – masking/hiding data

- New features to ensure high availability of data

- Online moving of data files

- Improvement and development of Flashback Data Archive option

- Enhancements in Recovery Manager to provide high availability of data

- Improvements in sectioning

- Heat Map and Automatic Data Optimization – automatic optimization of data lifecycle maintenance

- Real-Time Database Operation – monitoring of operations execution

- New options to increase SQL command performance: Database In-Memory, Full Database In-Memory Caching, Automatic Big Table Caching.

- New options of optimizer: Adaptive Execution Plans, SQL Plan Directives, new types of histograms, extended statistics, Adaptive SQL Plan Management

- Real-Time ADDM

- Compare Period ADDM

- Improvement of Real Application Testing option

- Other improvements in database performance tuning

Recognized and trusted by the best

Choose your best team and win with Parimatch

For an extensive selection of top-notch games paired with incredible offers, MyStake Casino is becoming the go-to choice for many online gambling enthusiasts.

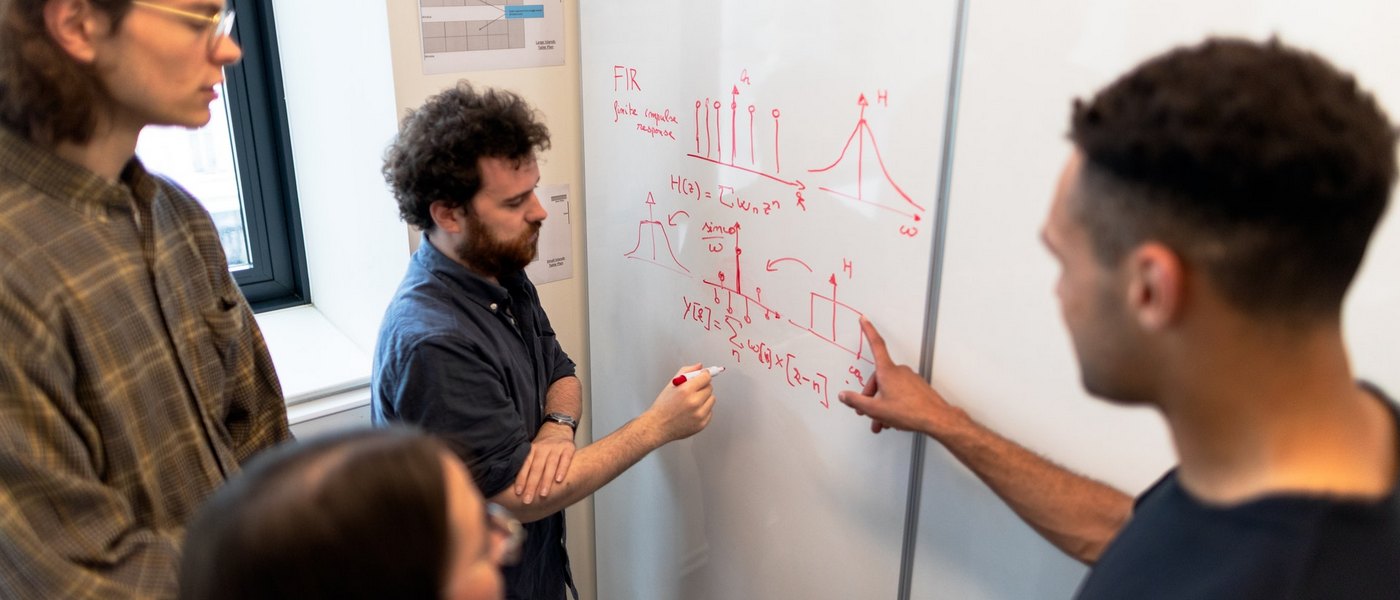

Solution engineering involves the process of designing and developing comprehensive solutions to address complex problems or challenges faced by businesses or organizations. This interdisciplinary approach combines elements of engineering, technology, and creativity to create effective and innovative solutions that meet specific needs and objectives.

Your Website Stay Online No Matter What: DMCA, DDoS, Freedom of Speach Violations, Government Regulations

KryptoCasino.me is the ideal source for crypto gambling. Claim your welcome offer today.

Which casino online is the best for you to play at – Hungarian experts from LegjobbKaszino will help you to understand this issue, as they provide detailed and up-to-date reviews of all the most popular online casino brands on their website

Welcome to the future of video translation! Are you reaching all the audiences you could be? With our cutting-edge video translation tool, empowered by the latest advancements in artificial intelligence, your content knows no borders. Say goodbye to language barriers as we offer seamless translation into 130+ languages, allowing your videos to resonate with viewers around the globe.

SEO.Casino – #1 Casino SEO Services – SEO for iGaming Websites, sports betting sites, and poker seo.

News